Improving data quality across government

Improving data quality across government using our 1Integrate rules capabilities

Author: Matthew White, 1Spatial

Focus on Data Quality (Data Validation)

If government are to deliver great services, create fact-based policies and make good decisions, the data they use needs to be of known and good quality so that it can be trusted. The Government Data Quality Hub (DQHub) has been set up to develop and share best practice in data quality. It provides strategic direction across government, produces guidance, and delivers training and support. Data should be held in the right usable structure and be effectively governed and managed by the right architectural software components. Good data quality requires people to define good quality requirements, to be rigorous in their management processes, to design data process flows carefully, to link back to the data quality mission, and to communicate the facts about the quality of the data both internally and externally.

For any data quality problem, it is much easier and less costly to prevent the issue from happening in the first place than to rely on defensive systems and ad hoc fixes after the fact.

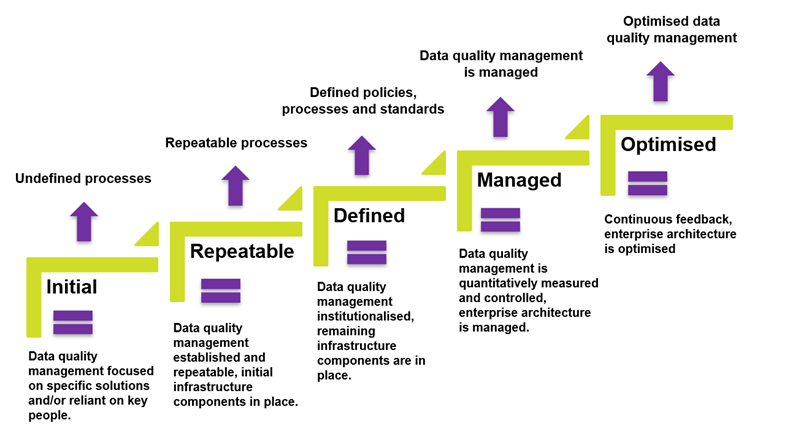

Based on our experience working with government, we see different levels of data quality management maturity. The diagram below represents a common pattern of data quality management maturity. It starts at the first level, “initialisation”, and moves up through the levels to “optimisation”.

Data quality management maturity patterns across government

Our Rules-Based Approach to Improving Data Quality Across Government

“Rules create order out of chaos”.

To live and function in a society, we must have rules that most of us agree on. Some of these rules are informal, like the ones we might have at home or in the office, and breaking them can have consequences. Just imagine what life would be like without any rules: things could get chaotic and dangerous. The same is true for data. Rules provide order for measuring, understanding and improving data quality.

Our approach to data quality

The diagram below illustrates our approach to data quality management, based on our knowledge, skills and experience. Our framework is iterative and runs in sprints that focus on the goal of improving the quality of data. We have evolved it from our experience on data-driven government projects. It aims to enable collaboration and provide feedback as quickly as possible while eliminating wasted time.

- Define the data quality mission and test it by assessing the impact of poor data on business performance.

- Define data quality business rules and targets.

- Assess the data against those business rules to gather metrics and data quality facts.

- Design quality improvement processes that remediate existing process issues, or introduce new processes.

- Move data quality improvement methods and processes into production.
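
To make the "define rules and targets" and "assess the data" steps more concrete, here is a minimal Python sketch. It is not 1Integrate, which is a no-code tool. The `Rule` class, the `assess` function, the parcel fields (`parcel_id`, `area_ha`) and the target values are all hypothetical and were chosen only for illustration. Each rule pairs a check with a target pass rate, and the assessment reports whether the data meets that target.

```python
from dataclasses import dataclass
from typing import Callable

# A record is one feature's attributes, e.g. a row from a land parcel table (hypothetical).
Record = dict

@dataclass
class Rule:
    name: str
    check: Callable[[Record], bool]  # returns True when a record conforms
    target: float                    # minimum acceptable pass rate (0.0 to 1.0)

def assess(records: list, rules: list) -> dict:
    """Run every rule over every record and compare each pass rate with its target."""
    report = {}
    for rule in rules:
        failures = [r for r in records if not rule.check(r)]
        pass_rate = 1 - len(failures) / len(records) if records else 1.0
        report[rule.name] = {
            "pass_rate": pass_rate,
            "meets_target": pass_rate >= rule.target,
            "failures": failures,  # the specific problems, for remediation
        }
    return report

# Hypothetical business rules with hypothetical targets.
rules = [
    Rule("parcel_id_present", lambda r: bool(r.get("parcel_id")), target=1.0),
    Rule("area_positive", lambda r: (r.get("area_ha") or 0) > 0, target=0.7),
]

records = [
    {"parcel_id": "P1", "area_ha": 2.5},
    {"parcel_id": "P2", "area_ha": 0.0},
    {"parcel_id": "", "area_ha": 1.2},
    {"parcel_id": "P4", "area_ha": 3.1},
]

report = assess(records, rules)
for name, result in report.items():
    status = "OK" if result["meets_target"] else "BELOW TARGET"
    print(name, f"{result['pass_rate']:.0%}", status)
```

Keeping the failing records alongside the metric means the same pass supports both stages: it measures quality against the target, and it lists the specific problems for the improvement processes to work on.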

These stages come together and can be described as a Data Quality Management Hub consisting of people, processes, and technology.

Careful management is key to unlocking the value of data. With huge volumes flowing through today’s data-rich government organisations, governance is an ongoing challenge.

Our pioneering rules-based approach uses automation to give you power over your data. It puts you in control, so that you can be confident your data is current, complete, consistent and compliant. Rules are written to match your requirements. You can write them yourself, write them in collaboration with our experts, or have us write them for you. They are managed within a central rules catalogue, shared across the enterprise and easily applied to any GIS platform.

Rules are used for two things:

- Validation: to validate the data by measuring quality levels and identifying the specific problems within it.

- Enhancement: to enhance the data, for example by fixing the problems, synchronising and integrating different sources of data, inferring missing data, or transforming it into a more useful and valuable form.
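
The difference between the two uses can be shown with a small geometric example. The Python sketch below is hypothetical and is not how 1Integrate expresses rules. A validation rule checks that a polygon ring is closed, and an enhancement rule repairs unclosed rings by repeating the first point. Rings are plain lists of `(x, y)` tuples, so no GIS library is assumed.

```python
# A point is an (x, y) coordinate pair; a ring is a list of points.
Point = tuple

def is_closed(ring: list) -> bool:
    """Validation rule: a polygon ring must start and end at the same point
    and have at least four positions (three corners plus the closing point)."""
    return len(ring) >= 4 and ring[0] == ring[-1]

def close_ring(ring: list) -> list:
    """Enhancement rule: repair an unclosed ring by repeating its first point.
    Returns a new list and leaves the input unchanged."""
    if ring and ring[0] != ring[-1]:
        return ring + [ring[0]]
    return list(ring)

def validate_and_enhance(rings: list):
    """Validate each ring, apply the enhancement rule to failures,
    and report the indexes of rings that still fail after repair."""
    repaired, still_failing = [], []
    for i, ring in enumerate(rings):
        fixed = ring if is_closed(ring) else close_ring(ring)
        repaired.append(fixed)
        if not is_closed(fixed):
            still_failing.append(i)
    return repaired, still_failing

rings = [
    [(0, 0), (1, 0), (1, 1), (0, 0)],  # already valid
    [(0, 0), (2, 0), (2, 2), (0, 2)],  # unclosed: the enhancement rule can fix it
    [(0, 0), (1, 1)],                  # too few points: cannot be fixed automatically
]
repaired, still_failing = validate_and_enhance(rings)
print(still_failing)
```

The third ring shows why the two uses are kept separate. An enhancement rule only fixes what it safely can. Anything it cannot fix is still reported by the validation rule, so a person can deal with it.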

You create a rules catalogue (or adapt an existing one) to meet your specific data requirements and automate the data management process. These rules form the basis of a data quality management system for auditing, measuring and improving existing data. They also enable a data delivery gateway for data flowing into your systems. In this way, existing data is improved, and incoming data can also be rejected or rerouted if it does not meet the quality requirements defined by the rules.
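
A data delivery gateway can be sketched as a decision made over an incoming batch. The Python below is a hypothetical illustration and not 1Integrate's behaviour or API. It assumes each rule is flagged as critical or non-critical. Any critical failure rejects the batch, and non-critical failures reroute it for review. The asset fields (`asset_id`, `condition_grade`) are invented for the example.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Callable

class Decision(Enum):
    ACCEPT = "accept"
    REROUTE = "reroute"  # e.g. send to a data steward's review queue (assumption)
    REJECT = "reject"

@dataclass
class GatewayRule:
    name: str
    check: Callable[[dict], bool]  # returns True when a feature conforms
    critical: bool                 # assumption: critical failures reject, others reroute

def gateway(submission: list, rules: list):
    """Decide whether an incoming batch is accepted, rerouted or rejected."""
    failures = [
        (rule.name, idx)
        for idx, feature in enumerate(submission)
        for rule in rules
        if not rule.check(feature)
    ]
    critical_names = {rule.name for rule in rules if rule.critical}
    if any(name in critical_names for name, _ in failures):
        return Decision.REJECT, failures
    if failures:
        return Decision.REROUTE, failures
    return Decision.ACCEPT, failures

# Hypothetical rules for supplier-submitted asset data.
rules = [
    GatewayRule("asset_id_present", lambda f: bool(f.get("asset_id")), critical=True),
    GatewayRule("condition_grade_valid",
                lambda f: f.get("condition_grade") in {1, 2, 3, 4, 5}, critical=False),
]

for batch in (
    [{"asset_id": "A1", "condition_grade": 2}],
    [{"asset_id": "A1", "condition_grade": 7}],
    [{"asset_id": None, "condition_grade": 3}],
):
    decision, failures = gateway(batch, rules)
    print(decision.value, failures)
```

The gateway returns the failure list together with the decision. This lets the supplier or data steward see exactly which rule each feature broke.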

As a result, our rules-based approach provides automated yet flexible data management processes. These processes can be shared and repeated across enterprises and platforms, and they are always applied consistently and objectively.

The Government Data Quality Framework outlines how action plans will help organisations to identify and understand the strengths and limitations of critical data. Action plans should set out the data quality rules that data must meet, and they should align with user needs and business objectives. Initiatives like this have helped guide our approach at 1Spatial.

Developing and executing machine-readable data validation using the precision of 1Integrate

Data quality rules are generally first conceptualised and described in your rules or data catalogue. 1Integrate enables these to be developed into executable, machine-readable data validation rules in a no-code environment. Working without code allows users with a range of different skills to help define and modify the rules.

1Integrate is data agnostic and can access many different types of data stored in different formats. This enables users to run their validation rules against all of their data automatically from a single environment.

1Integrate ensures that data has undergone quality checks, so you can be confident that its fitness and consistency have been tested in an automated system. It also enables data stewards to define, modify and configure data quality rules easily in the no-code environment, and to enforce those definitions in the data itself.

1Integrate can also be used as a data integration platform. Recent rapid developments in Artificial Intelligence (AI) have made the software more powerful. They have extended it from a rules-based, knowledge-engineering expert system to one that can take advantage of new AI techniques such as machine learning. Additional connections to new data sources and APIs allow us to cleanse, sync, update and analyse data to solve your data integration problems. Examples include Google BigQuery and Web Feature Services (WFS) such as the OS Data Hub.

Experiences across government using 1Integrate for improved data quality

1Integrate is helping government organisations to create and maintain authoritative and actionable information, benefiting our economy, environment and society. Such information is needed to undertake “rear view mirror” assessments, drive change in the here and now, and shape the future. The adoption of rules is accelerating, improving confidence in data and in turn enabling more effective decision making.

Here are some examples of our experiences using 1Integrate to improve data quality for government organisations.

Geospatial data is crucial for the timely and accurate payment of agricultural and environmental subsidies, and the Rural Payments Agency’s Land Management System is at the heart of this. 1Spatial’s knowledgeable and creative approach is helping to meet the agency’s data needs by applying 1Integrate’s rules for data validation. This helps the agency to keep its data quality high, and it has allowed the agency to build robust processes for updating its land register.

The Environment Agency’s Spatial Data Infrastructure supports the management of flood risk from main rivers, reservoirs, estuaries and the sea, which involves planning, designing, constructing and operating assets. Two things are critical: improving the quality of the asset data the Environment Agency receives from suppliers, and improving the timeliness of the data made available to business users. 1Spatial is enabling the Environment Agency to validate asset data from its suppliers using business rules and 1Integrate. The Environment Agency is demonstrating how defining data requirements and rules in a machine-readable format allows suppliers to generate data at source. That data can then be submitted, verified and validated before being formally accepted into the central database.

Find out more

If you would like to find out more about how we work to improve data quality by adopting rules and 1Integrate, download our little book on data quality. Alternatively, if you have a question for one of our experts, do not hesitate to get in touch.