National Trust

Automated data validation streamlines National Trust’s grant process

The National Trust was founded in 1895 to preserve the national heritage and open spaces of England, Wales and Northern Ireland (there is a separate National Trust for Scotland). Today it is the largest membership charity in the UK with 4.5 million members.

The National Trust owns 250,000 hectares of land and 775 miles of coastline. It also protects and opens to the public over 350 historic houses, 284 gardens and 1,357 scheduled ancient monuments. The charity relies on a number of sources for its income, including membership subscriptions, donations and legacies. Grants received from a range of statutory bodies are also an important source of funding.

Chris Cawser is Conservation Core Data Lead, responsible for geospatial data relating to the Trust’s land and properties. The National Trust recently migrated to Esri’s ArcGIS® platform with all geospatial data being stored in an Oracle® database.

Expediting data collection for Agricultural Grant application

As the owner of large areas of rural land, the National Trust is eligible for agricultural grants worth approximately £10 million per year. This money, applied for annually from the Rural Payments Agency (RPA), is used to support a diverse range of conservation projects including protecting rare habitats and species, heritage features, beautiful landscapes and improving access, as well as supporting sustainable farming.

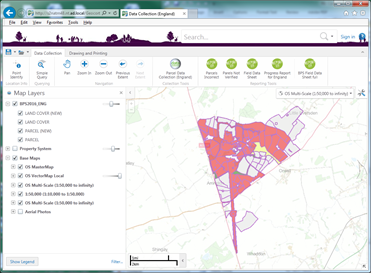

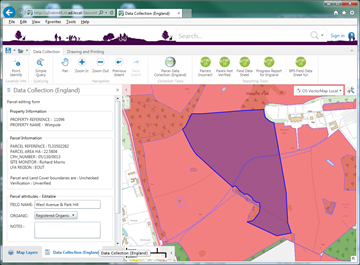

The application process requires the Trust to validate information through a framework provided by the RPA. The Trust must check its application to ensure records reflect current land uses. For England alone, there are 9,000 separate land parcels (subdivided into 15,000 land-use areas) to be surveyed, validated and updated. This work is the responsibility of 150 site monitors, often local rangers or others familiar with their areas. As Chris explains, “It’s invaluable to get input from those who know the lie of the land.”

Where the dimensions of a parcel have changed, for example because fences have been removed or erected to open up or subdivide it, the records must be updated. Once the framework is correct, the current land use (such as permanent grassland, scrubland or forestry) must be recorded and its eligibility for grant funding assessed. For example, a two-hectare parcel might be grant-eligible as permanent grassland, but 0.5 hectares of the land might be used as an overflow car park and thus be ineligible. There are stiff penalties for misreporting land use, so accuracy is critically important for the Trust.
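The car park example can be expressed as a small calculation. The sketch below is purely illustrative: the `LandUseArea` record and the `eligible_hectares` function are hypothetical names, not part of the RPA framework or the Trust's system, and the split of 1.5 hectares of grassland plus 0.5 hectares of car park is taken from the example above.

```python
from dataclasses import dataclass


@dataclass
class LandUseArea:
    """One land-use area within a parcel (hypothetical record layout)."""
    land_use: str
    hectares: float
    eligible: bool


def eligible_hectares(areas):
    """Sum the area of the land-use areas that are flagged as grant-eligible."""
    return sum(area.hectares for area in areas if area.eligible)


# The two-hectare parcel from the example: grassland plus an overflow car park.
parcel = [
    LandUseArea("permanent grassland", 1.5, True),
    LandUseArea("overflow car park", 0.5, False),
]

total = sum(area.hectares for area in parcel)
print(f"Parcel total: {total} ha, eligible: {eligible_hectares(parcel)} ha")
```

Under these assumptions only 1.5 of the parcel's 2 hectares would count towards the claim, which is the distinction each site monitor has to record correctly.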

In the past, the process of surveying, validating and collating grant data was onerous and time-consuming. Site monitors were required to collect data in standalone tools such as spreadsheets. These were then collated and validated centrally. Only at that point, some weeks after the survey, would any queries come to light and site monitors be contacted to check and possibly re-survey an area. Spreadsheets and similar tools frequently involved large amounts of free text, which was prone to error and misinterpretation.

Automating data validation at point of collection

When the National Trust migrated to ArcGIS, 1Spatial supplied Geocortex Essentials, part of the VertiGIS Studio suite of products, as a solution for creating custom applications on top of the ArcGIS platform. Chris began to explore how Geocortex Essentials could help make the data collection exercise more efficient. With support from 1Spatial’s consultants, he created a solution using the Workflow capability within VertiGIS Studio.

“VertiGIS Studio is really good for getting an application built and deployed quickly,” says Chris. “For more complex workflows, we’ve had 1Spatial come in for consultancy days. They’re great at solving problems and giving us the building blocks to re-use in other projects. It’s a very collaborative approach.”

The data collection solution that Chris developed allows site monitors to enter data directly using their web browser. The automated workflow runs validation checks as data is being entered to ensure that each entry complies with required data standards and expectations. Chris explains, “With VertiGIS Studio, we effectively front-load the data validation, using scripts to run error-checks before data entries are accepted.”
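To illustrate what front-loading validation can look like, here is a minimal sketch in Python. It is not the Trust's workflow and does not use the VertiGIS Studio API; the function `validate_entry`, the field names and the list of allowed land uses are all assumptions made for the example. The idea is simply that an entry is checked against fixed rules and rejected with clear messages before it is stored, rather than being queried weeks later.

```python
# Hypothetical list of accepted land-use values; a fixed list replaces free text.
ALLOWED_LAND_USES = {"permanent grassland", "scrubland", "forestry"}


def validate_entry(entry, parcel_hectares):
    """Return a list of error messages; an empty list means the entry is accepted."""
    errors = []

    # Normalise the land-use value before comparing it with the allowed list.
    land_use = str(entry.get("land_use") or "").strip().lower()
    if land_use not in ALLOWED_LAND_USES:
        errors.append(f"Unknown land use: {land_use!r}")

    # The recorded area must be a positive number no larger than the parcel.
    hectares = entry.get("hectares")
    if not isinstance(hectares, (int, float)) or hectares <= 0:
        errors.append("Area must be a positive number of hectares")
    elif hectares > parcel_hectares:
        errors.append(
            f"Area {hectares} ha exceeds parcel size {parcel_hectares} ha"
        )

    return errors


# An entry that passes, and one that is rejected at the point of entry.
accepted = validate_entry({"land_use": "Forestry", "hectares": 1.0}, 2.0)
rejected = validate_entry({"land_use": "grass??", "hectares": 3.0}, 2.0)
print(accepted)
print(rejected)
```

Because the checks run while the site monitor is still entering data, any problem is reported to the person best placed to correct it.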

Reducing error risk and validation queries

Most errors or discrepancies are now captured at the point of entry by the site monitor who is best placed to resolve the issue. As a result, site monitors receive far fewer queries after the event and this minimises the need for any re-surveying.

“We are placing total control of data verification in the hands of the staff who have the knowledge about what is being collected,” says Chris. “The whole data collection experience has improved massively for site monitors and it’s been really positively received. We now avoid the ‘Are you sure this is correct?’ calls which saves everyone’s time and reduces frustration.”

Avoiding lengthy, post-collection validation

Automating data validation at the point of entry avoids the need for lengthy checking before the data is submitted to the RPA. “Last year, pulling together and validating all the data we needed to submit took me six weeks,” Chris says. “This year, we spent just one week creating scripts to output the data and verifying the results. Next year, even that week should be reduced, because we have everything set up. It’s a massive saving in time.”

Chris plans to spend the time saved applying his new VertiGIS Studio skills to a list of other projects: “This has been our first use of VertiGIS Studio for a major exercise like this. Already, I can see where we can re-use this knowledge in other areas.”