Location (Geospatial) Master Data Management: An Overview

As a society we have been using maps for thousands of years to help make decisions - whether it’s for military intelligence or simply for taking a journey from A to B.

1. Predictions and modelling based on spatial data

Over the last 30 years these maps have become digitised. These digital maps are now being used in numerous ways to make predictions and analyse trends – either on their own or in combination with other data sources.

In the world of digital maps, understanding the accuracy of the underlying data is important for decision making.

For example, for a journey from A to B, an error or gap in the road location data will lead to non-optimal routes being proposed and then taken.

2. Data combined from numerous sources

Geospatial data can often be held in disparate databases, formats and systems. Often, this data is incomplete, outdated or even completely missing.

To maximise the value of geospatial data, it is often used in combination with other data, such as data from other systems, third parties, or open data, because the most powerful analysis comes from combining two or more datasets together.

The data is often held in spreadsheets, databases or within specific software and made available via APIs – which allow software components to talk to each other automatically.

It is typically text, numbers and dates, but data often has an implicit location, for example an address that equates to a location in the real world.

3. Spatial data structures

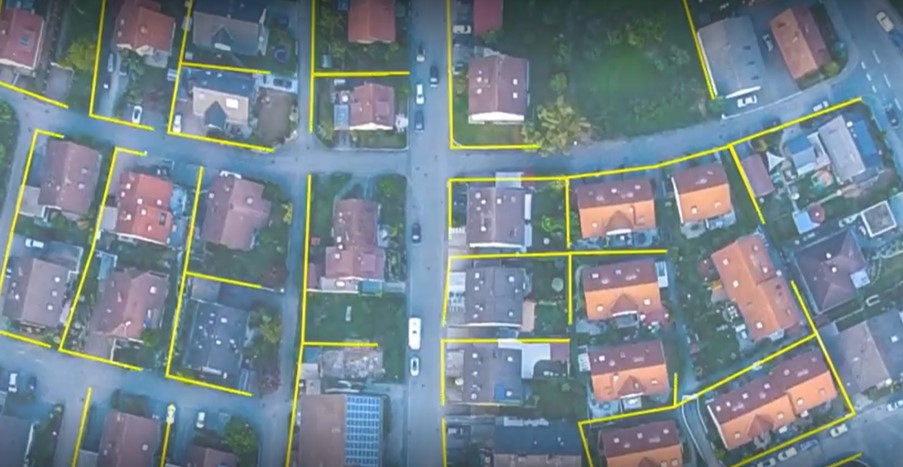

But spatial data is more than just addresses and is more complex to manage than spreadsheet data. It is used to represent people, places and physical things – for example buildings, utility networks, forests or rivers - but also virtual assets - such as airspaces or land ownership.

Raw spatial data is not always in a useful 'structure' - for example aerial imagery or point clouds or terrain meshes are of limited use for automatic analysis or operational decisions until they are turned into structured data in the form of points, lines, polygons or solids.

Structured spatial data opens the door to very powerful automatic analysis and allows insights that are not possible using only traditional non-location data – an example would be identifying if the billing address of a water utility customer matches the location of their water meter.

If not, then it might mean customers are being sent the wrong bill, or not being billed at all. Operational problems caused by incomplete or mismatching data are common, especially when data is in different systems or from different sources.
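The billing-address check described above can be sketched in a few lines of Python. This is only an illustration, not how any particular product implements it: it assumes billing addresses have already been geocoded to latitude/longitude, and the record layout, the sample coordinates and the 50 m threshold are all hypothetical.

```python
import math

def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in metres between two latitude/longitude points."""
    r = 6_371_000.0  # mean Earth radius in metres
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * r * math.asin(math.sqrt(a))

def mismatched_customers(customers, max_distance_m=50.0):
    """Return ids of customers whose meter lies further than the threshold
    from their (geocoded) billing address."""
    return [
        c["id"]
        for c in customers
        if haversine_m(*c["billing"], *c["meter"]) > max_distance_m
    ]

# Hypothetical records: (latitude, longitude) pairs.
customers = [
    {"id": "C1", "billing": (54.9783, -1.6178), "meter": (54.9784, -1.6179)},
    {"id": "C2", "billing": (54.9783, -1.6178), "meter": (54.9900, -1.6000)},
]

flagged = mismatched_customers(customers)
print(flagged)
```

A flagged record is a candidate for review rather than a confirmed error, since a billing address can legitimately differ from the supply address.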

Let's take a real, life-and-death example to illustrate a simple data quality problem: the next generation of 911 emergency services in the USA.

4. Errors with regional boundaries

When a 911 call is made in the US, the caller's location is used to route the call to the right people to help, by checking which geographical region (or polygon) the caller is in. Each region is served by a Public Safety Answering Point (PSAP). But if the PSAP region polygons are wrong, the call handling process might find no PSAP where there is a gap between them, or more than one PSAP where they overlap.

This would mean that the call is sent to the wrong dispatch centre, or in the worst case, none at all.
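To make the gap and overlap cases concrete, here is a minimal Python sketch using a standard ray-casting point-in-polygon test. The region names and coordinates are invented, the polygons are simple rectangles in an arbitrary planar coordinate system, and points lying exactly on a boundary are not treated specially; a production system would use a proper geometry library and real boundary data.

```python
def point_in_polygon(x, y, polygon):
    """Ray-casting test: True if (x, y) lies inside the polygon,
    given as a list of (x, y) vertices."""
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        # Does this edge straddle the horizontal line through y?
        if (y1 > y) != (y2 > y):
            # x-coordinate where the edge crosses that line
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside

def psaps_for_location(x, y, regions):
    """Names of every region polygon that contains the point."""
    return [name for name, poly in regions.items() if point_in_polygon(x, y, poly)]

def classify(matches):
    """'ok' for exactly one region, 'gap' for none, 'overlap' for several."""
    if not matches:
        return "gap"
    return "ok" if len(matches) == 1 else "overlap"

# Hypothetical regions: A and B overlap between x=3 and x=4,
# and there is a gap between B and C from x=8 to x=9.
regions = {
    "PSAP_A": [(0, 0), (4, 0), (4, 4), (0, 4)],
    "PSAP_B": [(3, 0), (8, 0), (8, 4), (3, 4)],
    "PSAP_C": [(9, 0), (12, 0), (12, 4), (9, 4)],
}

for point in [(1, 1), (3.5, 2), (8.5, 2)]:
    matches = psaps_for_location(*point, regions)
    print(point, matches, classify(matches))
```

Checking individual call locations only finds problems where a call happens to land; validating the polygons against each other ahead of time catches gaps and overlaps before any call is affected.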

5. Incorrect positioning of address points

In addition, the location of each landline phone number is associated with an address point, to correctly pinpoint the location of landline callers. These address points need to be correctly positioned to ensure that they are in the correct PSAP.

To check for accuracy, the address points can be cross-checked against valid address range information that is available from the Department of Transportation.

If the address doesn't match the street number range, then that indicates a problem with the location of the address point.
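A range check of this kind might look like the following Python sketch. The `AddressRange` structure, its field names and the sample values are assumptions made for illustration; real address range datasets differ in layout, and the step that finds which street segments are near an address point is assumed to have happened already.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class AddressRange:
    """Hypothetical address range for one side of a street segment."""
    street: str
    low: int
    high: int
    parity: str  # "even", "odd" or "both"

def matches_range(number, rng):
    """True if the house number falls in the range and has the right parity."""
    if not rng.low <= number <= rng.high:
        return False
    if rng.parity == "even":
        return number % 2 == 0
    if rng.parity == "odd":
        return number % 2 == 1
    return True

def address_point_is_plausible(street, number, nearby_ranges):
    """nearby_ranges: ranges of the street segments closest to the address point.
    The point is plausible if any of them covers the street and number."""
    return any(r.street == street and matches_range(number, r) for r in nearby_ranges)

# Hypothetical ranges for the segment nearest to an address point.
nearby = [
    AddressRange("MAIN ST", 100, 198, "even"),
    AddressRange("MAIN ST", 101, 199, "odd"),
]

print(address_point_is_plausible("MAIN ST", 150, nearby))  # within the even range
print(address_point_is_plausible("MAIN ST", 450, nearby))  # outside both ranges
```

A failed check does not say which dataset is wrong; it only flags that the address point and the range data disagree and need review.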

Getting this data correct is vital for identifying the location of the caller and allocating the correct PSAP to handle the call.

No-code rules-based data improvements

1Spatial's 1Integrate rules engine lets organisations define “no-code” rules for automatically detecting these problems and, where necessary, correcting the data.

We use our 1Spatial patented software to audit and automatically correct location data, keeping it accurate and up to date.

Our technology can automatically validate, cleanse, enhance and transform the customer's spatial and non-spatial data that may exist in many different data sources. This can produce real operational improvements which save time, money or lives, increase efficiency or reduce carbon emissions.

We can solve problems that range from small volumes of simple data through to large volumes of complex data, using our automated tools to scale flexibly so that your workflows are resilient and won’t be impacted as you expand.

No-code rules development means that rules are created and managed in a user interface by data experts rather than development teams, providing a flexible and easily manageable set of rules that can be enhanced over time to meet the needs of your ever evolving business.

The rules provide repeatable and traceable automation logic that can be stored and shared rather than locked away in software or people's heads.

Data complexities and inefficiencies

As mentioned before, spatial data is usually collected from thousands of different sources and that can lead to complexity and inefficiency.

Collecting this data is difficult - it often ends up being sent by an uncontrolled, unauditable or insecure method such as email or FTP.

1Spatial's 1Data Gateway product provides an easy-to-use portal that allows data providers to upload their data which is then passed to the 1Integrate rules engine to automatically check it for quality and consistency. This is where the high-performance spatial cross-referencing of the rules engine is critical to support the processing of large amounts of data from many different sources.

If the data is not of a high enough quality, then it is rejected with a detailed list of reasons why not. If it is accepted, then it is automatically synchronised and updated into a central data store.
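The accept-or-reject step can be pictured with a small Python sketch. This is not the 1Data Gateway or 1Integrate API, and in those products rules are defined without code; the `Rule` type, the rule names, the record fields and the `min_pass_rate` threshold below are all hypothetical, chosen only to show rules held as data and a rejection that carries its reasons.

```python
from typing import Callable, NamedTuple

class Rule(NamedTuple):
    name: str
    check: Callable[[dict], bool]  # returns True when the record passes

def _number_between(record, key, low, high):
    value = record.get(key)
    return isinstance(value, (int, float)) and low <= value <= high

# Hypothetical rules, kept as data so they can be listed, stored and shared.
RULES = [
    Rule("has_id", lambda r: bool(r.get("id"))),
    Rule("latitude_in_range", lambda r: _number_between(r, "lat", -90, 90)),
    Rule("longitude_in_range", lambda r: _number_between(r, "lon", -180, 180)),
]

def validate_upload(records, rules=RULES, min_pass_rate=1.0):
    """Run every rule on every record and decide whether to accept the upload.
    Returns the decision, the pass rate and a list of (record index, rule name)."""
    failures = [
        (index, rule.name)
        for index, record in enumerate(records)
        for rule in rules
        if not rule.check(record)
    ]
    failed_records = {index for index, _ in failures}
    pass_rate = 1.0 if not records else 1 - len(failed_records) / len(records)
    return {
        "accepted": pass_rate >= min_pass_rate,
        "pass_rate": pass_rate,
        "failures": failures,
    }

upload = [
    {"id": "a1", "lat": 42.7, "lon": -84.5},
    {"id": "a2", "lat": 142.7, "lon": -84.5},  # latitude out of range
]
result = validate_upload(upload)
print(result["accepted"], result["failures"])
```

Returning the individual failures, not just a yes or no, is what lets a supplier see exactly which records to fix before resubmitting.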

Data dashboard views

1Data Gateway records each upload and its associated data quality which can then be viewed in a secure dashboard.

Data uploaders can see only their own uploads and quality statistics, while administrators can see the statistics across the whole project. This is useful for assessing data suppliers, ensuring that they are meeting their obligations and identifying which rules cause the most problems – those rules might indicate upstream supply chain or software issues and are therefore targets for process optimisation.
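As a rough illustration of the statistics behind such a dashboard, the Python sketch below tallies failures per rule and per supplier from an upload log. The log structure, supplier names and rule names are invented for the example and do not reflect how 1Data Gateway stores its records.

```python
from collections import Counter

# Hypothetical upload log: one entry per upload, listing the rules it failed.
upload_log = [
    {"supplier": "county_a", "failed_rules": ["address_in_range", "no_gaps"]},
    {"supplier": "county_b", "failed_rules": ["address_in_range"]},
    {"supplier": "county_a", "failed_rules": []},
    {"supplier": "county_c", "failed_rules": ["address_in_range", "no_overlaps"]},
]

def failures_by_rule(log):
    """Count how often each rule failed across all uploads."""
    return Counter(rule for upload in log for rule in upload["failed_rules"])

def failures_by_supplier(log):
    """Count the total number of rule failures for each supplier."""
    totals = Counter()
    for upload in log:
        totals[upload["supplier"]] += len(upload["failed_rules"])
    return totals

rule_counts = failures_by_rule(upload_log)
supplier_counts = failures_by_supplier(upload_log)
print(rule_counts.most_common(1))
print(dict(supplier_counts))
```

A rule that fails for many unrelated suppliers points to a shared upstream cause, whereas failures concentrated in one supplier point to that supplier's process.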

Location Master Data Management

The process of validating, cleaning and aggregating data is often called ‘Master Data Management’. There are several MDM tools available, but they don’t handle location data – 1Spatial tools handle both location and non-location data together.

Location Master Data Management (LMDM) ensures that your data management process is automated and repeatable across your enterprise and different technology platforms. By auditing and cleansing, synchronising, updating and analysing different location data sets, it delivers significant cost and time savings, and crucially, data that you can trust and rely upon. It also prepares your data for system replacement and consolidation if needed. Unlike traditional MDM, LMDM provides you with the right location-enabled technology to meet your unique needs for spatial and non-spatial data.

What sets 1Spatial apart from traditional GIS software providers

Geospatial data can be handled by traditional GIS software or some database tools, but these have some limitations – which is where 1Spatial’s technology can help:

- No need for centralisation - Traditional software providers can typically only handle data that has already been centralised into one environment. 1Spatial tools can check and cross-reference data from different sources without needing it to be pre-loaded into a central system.

- No-code rules development - Other systems don't provide no-code rules development and often require queries or code to be written by specialist developers.

- Scale and complexity of data - Other systems can struggle with the performance of large amounts of complex cross-referencing needed between the data records.

- Cloud-based data upload portal - Other systems don’t provide an out-of-the-box, secure, multi-tenancy data upload portal as a way to aggregate data and automatically apply rules.

1Spatial: a leading geospatial management solution provider

1Spatial tools help to clean and aggregate data which is then used to run business processes and feed business applications. All business applications benefit from the better data. These could be third-party applications such as the 911 automatic call routing system, but also business applications that 1Spatial creates for use in government, utilities and transport.

For example, for the State of Michigan in the US we are using our technology to help check and correct data from over 300 different cities and counties to create a State-wide digital map to deliver public services to the State.

We have also helped the US Census Bureau reduce the number of field canvassers required for the US census by 75% and helped bring about a cost saving of $5 billion.

Similarly, we helped Northumbrian Water save more than £8 million and 3 years of work by accurately mapping its combined water assets using available but incomplete and outdated data. We were able to do this by inferring the missing information with our powerful rules-based technology.