How to Deliver Continuous Data Quality Improvement

Author: Bob Chell, CPO, 1Spatial

We know data quality is important, and that ignoring it can be expensive and time-consuming. Yet in practice data quality is often neglected, especially when there is no clearly defined goal. Too many initiatives are planned and run as one-off exercises, and many run aground by targeting the most difficult problems rather than considering where the return will be largest.

We find that successful projects are run in accordance with six data excellence principles.

We follow agile data consultancy methodologies that have evolved through our experience of many similar data-driven projects. These enable collaboration and provide feedback as quickly as possible while eliminating wasted time.

Six data quality excellence principles

1. Embrace automation

2. Ensure repeatability and traceability

3. Design simple solutions to difficult scenarios and avoid unnecessary technical complexity

4. Target the typical, not the exceptional, in order to maximise value

5. Adopt an evidence-based decision-making process to create business confidence in the outcome

6. Collaborate to identify issues and work towards a solution

The key factors that underpin a robust data quality approach:

- Establish and document the standard to which you need your data to conform, then perform an initial assessment to determine what needs to be done to achieve this standard.

- Once you have decided on your target, put in place processes that validate the data to identify every non-conformance. Apply manual or, where appropriate, automated fixes to the data, then re-validate to confirm conformance so that the data can be adopted.
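The validate, fix and re-validate cycle described above can be sketched in a few lines of Python. This is a minimal illustration, not 1Spatial's implementation. It assumes that records are plain dictionaries, and the `Rule` class, the `validate` and `improve` functions and the two sample rules are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Callable, Optional

Record = dict  # hypothetical: one feature's attributes as a plain dict


@dataclass
class Rule:
    """A hypothetical conformance rule with an optional automated fix."""
    name: str
    check: Callable[[Record], bool]
    fix: Optional[Callable[[Record], Record]] = None


def validate(records: list[Record], rules: list[Rule]) -> list[tuple[int, str]]:
    """Return (record index, rule name) for every non-conformance."""
    return [(i, rule.name)
            for i, rec in enumerate(records)
            for rule in rules
            if not rule.check(rec)]


def improve(records: list[Record], rules: list[Rule]) -> list[tuple[int, str]]:
    """Validate, apply automated fixes where available, then re-validate.

    Returns the non-conformances that remain and need manual correction.
    """
    by_name = {rule.name: rule for rule in rules}
    for i, name in validate(records, rules):
        fix = by_name[name].fix
        if fix is not None:
            records[i] = fix(records[i])
    return validate(records, rules)


rules = [
    Rule("name_trimmed",
         check=lambda r: r["name"] == r["name"].strip(),
         fix=lambda r: {**r, "name": r["name"].strip()}),
    Rule("height_positive", check=lambda r: r["height"] > 0),  # no safe auto-fix
]
records = [{"name": " Mill Lane ", "height": 5.0},
           {"name": "High St", "height": -1.0}]
remaining = improve(records, rules)
print(remaining)  # [(1, 'height_positive')]
```

The whitespace problem is repaired automatically. The negative height has no safe automatic fix, so it is left for manual correction.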

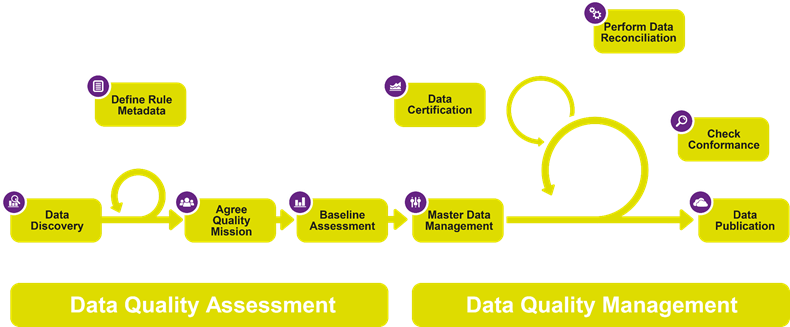

A 2-stage data quality approach:

1. Data Quality Assessment

This is the process whereby the current data condition is assessed and the target data quality is defined.

- Data Discovery

Identify all of the participating datasets and sources and any implicit or explicit relationships between them.

- Agree Quality Mission

Determine the target data quality measures. These can include spatial and non-spatial data constraints, in any combination.

- Define Rule Metadata

This is how the Quality Mission metrics are formulated as logic rules that can be applied to the source data to determine its fitness for purpose. The rules are defined for objects in their context, so that the relationships between features, both spatial and non-spatial, can be assessed.

- Baseline Assessment

The defined rules are applied to the source data using 1Validate, producing a detailed non-conformance report that quantifies the level of compliance with each rule.
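The sketch below gives a rough illustration of rule metadata and a baseline assessment. It expresses three quality-mission metrics as named logic rules and reports compliance for each rule. It does not use 1Validate. The sample data, `RuleMetadata`, `baseline_assessment` and the rule names are all hypothetical. Real spatial rules would test true geometries; this example assumes axis-aligned bounding boxes for simplicity. Sorting the report by failure count reflects the principle of targeting the typical before the exceptional.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Callable

# Hypothetical sample data: parcels as bounding boxes (min_x, min_y, max_x, max_y)
# and building points that should reference an existing parcel and lie inside it.
parcels = {"P1": (0.0, 0.0, 10.0, 10.0), "P2": (10.0, 0.0, 20.0, 10.0)}
buildings = [
    {"id": "B1", "parcel": "P1", "x": 2.0, "y": 3.0, "use": "residential"},
    {"id": "B2", "parcel": "P2", "x": 5.0, "y": 5.0, "use": "retail"},  # outside P2
    {"id": "B3", "parcel": "P9", "x": 1.0, "y": 1.0, "use": ""},  # unknown parcel, no use
]


@dataclass
class RuleMetadata:
    """A quality-mission metric expressed as a named logic rule (illustrative only)."""
    name: str
    test: Callable[[dict], bool]


def inside(b: dict, box: tuple[float, float, float, float]) -> bool:
    """True if the building point lies within the bounding box."""
    min_x, min_y, max_x, max_y = box
    return min_x <= b["x"] <= max_x and min_y <= b["y"] <= max_y


rules = [
    # Non-spatial rule on a single object.
    RuleMetadata("use_populated", lambda b: bool(b["use"])),
    # Relationship rule: the referenced parcel must exist.
    RuleMetadata("parcel_exists", lambda b: b["parcel"] in parcels),
    # Spatial rule in context: the building must lie within its own parcel.
    RuleMetadata("within_parcel",
                 lambda b: b["parcel"] in parcels and inside(b, parcels[b["parcel"]])),
]


def baseline_assessment(features: list[dict], rules: list[RuleMetadata]) -> list[dict]:
    """Apply every rule to every feature and quantify compliance per rule."""
    if not features:
        return []
    report = []
    for rule in rules:
        failures = [f["id"] for f in features if not rule.test(f)]
        compliance = 100.0 * (len(features) - len(failures)) / len(features)
        report.append({"rule": rule.name, "failures": failures,
                       "compliance_pct": round(compliance, 1)})
    # Most frequent non-conformances first (stable sort keeps rule order on ties).
    return sorted(report, key=lambda row: len(row["failures"]), reverse=True)


for row in baseline_assessment(buildings, rules):
    print(row["rule"], row["compliance_pct"], row["failures"])
```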

2. Data Quality Management

This is the process whereby data that is maintained in a master repository with ongoing operational updates and revisions is automatically validated, and if necessary repaired, before it is released as an authoritative product to stakeholders.

- Master Data Management

This is where the master data is maintained and updated using whatever business processes and technologies best suit an organisation’s needs. If 1Spatial Management Suite is used, the data quality process is enabled automatically, as it is an integral part of the flowline. If an alternative data management solution is used, our technology components 1Validate and 1Integrate can readily be incorporated into the workflow to ensure that the required data quality standards are met.

- Check Conformance

Using 1Validate, the rule metadata defined in the Data Quality Assessment is applied either to the complete master data holding or only to data that has been tracked as changed. Any non-conformances are recorded and made available for efficient automated or manual correction or reconciliation.

- Perform Data Reconciliation

Any identified non-conformances must be addressed through Data Reconciliation before the data can be approved for publication. Reconciliation can be fully automated using the rules-based facilities in 1Integrate, or it can be carried out through manual editing. Manual editing is significantly enhanced by the spatial non-conformance reports generated by 1Validate. These reports identify not only which feature fails a validation rule but also precisely where on that feature the spatial non-conformance occurs.

- Data Certification

Once data has passed all the required validation tests, it is certified as compliant with the stated Quality Mission and made available for publication to stakeholders, who can then adopt it with confidence.

- Data Publication

Data that has been certified as conforming to the agreed quality measures is now ready for publication. This may be as simple as releasing it for distribution to stakeholders, or it may involve automated processes that propagate the approved changes into specific products (vector or raster).
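The Data Quality Management cycle above can be sketched as follows: check changed data, reconcile, certify, then publish. This illustration rests on several assumptions and does not describe how 1Spatial Management Suite, 1Validate or 1Integrate work. `MasterStore`, `check_conformance`, `reconcile`, `certify_and_publish` and the road-feature rules are all hypothetical. Rules that carry a `fix` stand in for automated reconciliation. Everything else is returned for manual editing, and certification is withheld until no non-conformances remain.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Callable, Optional


@dataclass
class Rule:
    name: str
    check: Callable[[dict], bool]
    fix: Optional[Callable[[dict], dict]] = None  # automated reconciliation, if any


@dataclass
class MasterStore:
    """Hypothetical master repository that tracks which features have changed."""
    features: dict[str, dict] = field(default_factory=dict)
    changed: set[str] = field(default_factory=set)

    def update(self, fid: str, feature: dict) -> None:
        self.features[fid] = feature
        self.changed.add(fid)


def check_conformance(store: MasterStore, rules: list[Rule], changed_only: bool = True):
    """Apply the rules to changed features only, or to the whole holding."""
    ids = sorted(store.changed) if changed_only else sorted(store.features)
    return [(fid, rule) for fid in ids for rule in rules
            if not rule.check(store.features[fid])]


def reconcile(store: MasterStore, issues) -> list[tuple[str, str]]:
    """Apply automated fixes; return the issues that need manual editing."""
    manual = []
    for fid, rule in issues:
        if rule.fix is not None:
            store.features[fid] = rule.fix(store.features[fid])
        else:
            manual.append((fid, rule.name))
    return manual


def certify_and_publish(store: MasterStore, rules: list[Rule]) -> Optional[dict]:
    """Certify only when all changed data passes every rule, then release it."""
    if check_conformance(store, rules):
        return None  # not certified: non-conformances remain
    release = {fid: dict(store.features[fid]) for fid in sorted(store.changed)}
    store.changed.clear()
    return release


rules = [
    Rule("road_class_upper", lambda f: f["class"].isupper(),
         lambda f: {**f, "class": f["class"].upper()}),
    Rule("speed_limit_set", lambda f: f.get("speed") is not None),
]
store = MasterStore()
store.update("R1", {"class": "a", "speed": 30})
store.update("R2", {"class": "B", "speed": None})
manual = reconcile(store, check_conformance(store, rules))
first_attempt = certify_and_publish(store, rules)  # blocked by R2
store.update("R2", {"class": "B", "speed": 50})    # the manual edit
release = certify_and_publish(store, rules)
```

Validating only the tracked changes keeps each cycle small. Calling `check_conformance(store, rules, changed_only=False)` instead re-checks the complete master holding.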

The 1Spatial Platform enables this comprehensive approach to delivering data quality. The software components in the 1Spatial Platform are available in the cloud, but they can also be used in standalone mode as part of any data management workflow.

Free Guide: How to Improve Spatial Data Quality

In our free guide on Spatial Data Quality, we look at why data quality is critical and how organisations are beginning to treat it as an ongoing process by deploying solutions that automate their data quality and data management procedures.

Discover:

- The cost of poor data and how to ensure a return on your digital investment

- How to realise the spatial data opportunity

- Important considerations for managing data quality

- The process to follow for data improvement

- 6 data excellence principles


The 1Spatial Platform supports:

- Data profiling, to provide both an evaluation of your current state of data quality and measurement of it over time. You can generate informative reports and share key data quality metrics with your team – the first step in solving data quality issues.

- Reporting, where data stewards can use the data profiling results to create predefined reports that watch for violations of data quality thresholds.

- The delivery of customised key quality indicators, so that teams can collaborate on improving data quality across the enterprise. This opens up the issue of data quality to a broader audience in your organisation, fostering positive change in the way your organisation manages data.

- The use of internal or external reference data to set the standard for your data. Combine multiple data sources into your

- The integration and interoperability with your existing technology stack – Esri, Oracle, SAP, open source.
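Data profiling and threshold reporting can be illustrated with a simple completeness metric. This minimal sketch assumes that records are dictionaries and treats `None` or an empty string as a missing value. The `profile` and `threshold_violations` functions and the threshold values are hypothetical, and a real profile would track many more measures. Rerunning the profile on each release gives the measurement over time described above.

```python
from __future__ import annotations


def profile(records: list[dict], attributes: list[str]) -> dict[str, float]:
    """Completeness per attribute: share of records with a non-empty value (0.0 to 1.0)."""
    n = len(records)
    return {
        a: (sum(1 for r in records if r.get(a) not in (None, "")) / n) if n else 0.0
        for a in attributes
    }


def threshold_violations(metrics: dict[str, float],
                         thresholds: dict[str, float]) -> dict[str, float]:
    """Return the metrics that fall below their agreed minimum."""
    return {a: v for a, v in metrics.items() if a in thresholds and v < thresholds[a]}


# Hypothetical address records with some missing values.
records = [
    {"id": 1, "address": "1 Mill Lane", "postcode": "AB1 2CD"},
    {"id": 2, "address": "", "postcode": "AB1 2CE"},
    {"id": 3, "address": "3 Mill Lane", "postcode": None},
    {"id": 4, "address": "4 Mill Lane", "postcode": "AB1 2CG"},
]
metrics = profile(records, ["address", "postcode"])
alerts = threshold_violations(metrics, {"address": 0.9, "postcode": 0.7})
print(metrics, alerts)
```

Here both attributes are 75% complete, but only `address` breaches its agreed threshold, so only that attribute is flagged.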

Our technology allows us to build simple, elegant solutions. We have worked hard to productise innovative ways to remove waste from the process and use data more intelligently.

Our core principles are embedded in our products and allow you to be:

1. Agile

Knowledge is built into the system, so you don’t have to start from scratch every time you need to cleanse data or get new functions of the business to contribute. You can develop and test ideas and hypotheses in real time to ensure you are working as leanly as possible.

2. Smart

Data quality is not just about saving money – though there is a tangible payback when you eliminate inaccuracy and duplication from your information systems. Any effective data solution should allow you to carry out an initial assessment of your data quality, which will help you put in place an effective plan for managing, continuously monitoring and improving it.

3. Innovative

It’s about creating new opportunities by harmonising data from disparate systems and providing your systems, executives and business teams with quality data. When you trust your data, you are motivated to use the information in new ways, giving rise to fresh ideas and inspiration.

4. Approachable

If you know what a broken item looks like and what a fixed item is supposed to look like, you can design and develop processes, train workers and automate solutions to solve real business problems. Tools that capture business rules allow you to collaborate with all stakeholders across your business.

About 1Spatial

Our core business is making geospatially referenced data current, accessible, easily shared and trusted. We have over 30 years of experience as a global expert, uniquely focused on the modelling, processing, transformation, management, interoperability and maintenance of spatial data – all with an emphasis on data integrity, accuracy and ongoing quality assurance.

We have provided spatial data management and production solutions to a wide range of international mapping and cadastral agencies, government, utilities and defence organisations across the world. This gives us unique experience in working with a plethora of data (features, formats, structure, complexity, lifecycle, etc.) within an extensive range of enterprise-level system architectures.

Speak to an Expert

We can help you develop a data quality improvement programme tailored to your needs.
